Protecting the Human: Why the EU AI Act is a Good Move

The other day, I was talking to a colleague about an AI-powered hiring tool that seemed to be everywhere in my job hunt. My colleague, a veteran software engineer, was concerned. Not about the tool's use itself, but about the lack of transparency in how it makes decisions. "How do we know it isn't automatically filtering out candidates based on their zip code, or simply reinforcing historical biases in the data?" she asked. (I’m paraphrasing, but that’s basically what she said, lol.)

That simple question, a blend of technical skepticism and ethical concern, perfectly captures why I'm strongly in favor of the European Union's new Artificial Intelligence Act (AI Act). It's the first comprehensive law of its kind, and while it's met some resistance, I see it as a necessary step to safeguard the public as AI moves from a cool tool to a critical part of our society.

Understanding the Risk-Based Framework

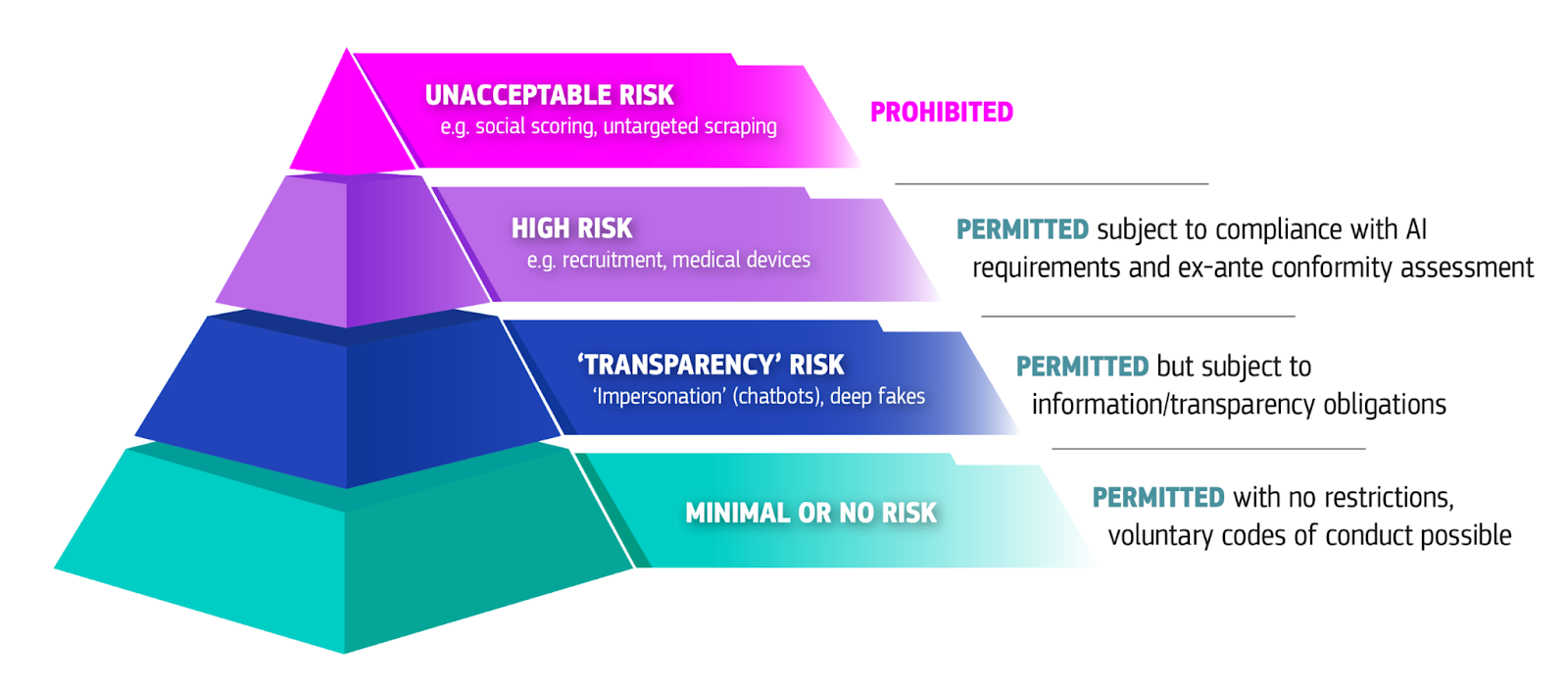

The EU AI Act is built around a risk-based approach: the level of regulation an AI system faces depends directly on the harm it could cause. I find this a very pragmatic way to regulate something as diverse as artificial intelligence, because not every AI application needs the same level of scrutiny.

The Four Risk Categories

The Act defines four levels of risk, and each level dictates the compliance requirements for the companies that build and use AI systems (the "providers" and "deployers" in the Act's language):

- Unacceptable Risk (Prohibited): These are AI systems that threaten fundamental rights, and they are banned outright. Examples include social scoring (classifying people based on their social behavior), systems that manipulate behavior to cause harm, and real-time remote biometric identification in public spaces by law enforcement (subject to narrow exceptions).

- High Risk (Strict Requirements): These systems pose a significant risk to health, safety, or fundamental rights. They are permitted but must comply with a full and rigorous set of requirements. High-risk areas include AI used in critical infrastructure (like transport), education (for assessing exams), employment (like CV-sorting software), and law enforcement.

- Limited Risk (Transparency Required): The main requirement here is transparency. People must be informed when they are interacting with an AI system, such as a chatbot. Providers of generative AI (like large language models) must also ensure their output is marked as AI-generated in a machine-readable way, and deepfakes must be clearly disclosed as such.

- Minimal/Low Risk (Free Use): Most AI systems, such as basic inventory management or automated invoice sorting, fall into this category and are permitted without significant additional regulation.
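To make the tiered idea concrete for developers, here is a minimal sketch of how a team might tag its own AI inventory by tier. The use-case names and the lookup table are hypothetical examples drawn from the list above; real classification depends on the Act's annexes and on legal review, not on a dictionary.

```python
from enum import Enum


class RiskTier(Enum):
    """The four tiers described above, from most to least regulated."""
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements"
    LIMITED = "transparency required"
    MINIMAL = "free use"


# Hypothetical, heavily simplified lookup table of example use cases.
# Real classification depends on the Act's annexes and on legal review.
EXAMPLE_USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "cv_sorting": RiskTier.HIGH,
    "exam_scoring": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "invoice_sorting": RiskTier.MINIMAL,
}


def classify(use_case: str) -> RiskTier:
    """Return the tier for a known example use case.

    Unknown use cases raise an error instead of silently defaulting to
    MINIMAL, because under-classifying a system is the costly mistake.
    """
    if use_case not in EXAMPLE_USE_CASES:
        raise ValueError(f"{use_case!r} needs a manual risk assessment")
    return EXAMPLE_USE_CASES[use_case]


if __name__ == "__main__":
    for case in ("cv_sorting", "customer_chatbot", "invoice_sorting"):
        tier = classify(case)
        print(f"{case}: {tier.name} ({tier.value})")
```

The one design choice worth copying is the failure mode: anything not yet assessed is escalated rather than quietly treated as low risk.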

For the High-Risk systems, the obligations are extensive: establishing risk management systems, ensuring high-quality data to minimize bias, logging activity for traceability, maintaining detailed documentation, and implementing human oversight mechanisms.
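"Logging activity for traceability" can sound abstract, so here is a minimal sketch of what one traceable decision record might look like. The Act does not prescribe a log format. The field names, the JSON-lines output, and the choice to store a hash of the input instead of the raw input are all assumptions. A real system would also have to decide what it must retain to reconstruct a decision later.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    """One traceable decision made by a (hypothetical) high-risk system."""
    timestamp: str
    model_version: str
    input_sha256: str               # hash instead of raw input, to limit stored personal data
    output: str
    human_reviewer: Optional[str]   # who exercised oversight, if anyone


def log_decision(raw_input: str, output: str, model_version: str,
                 reviewer: Optional[str] = None) -> str:
    """Build a decision record and return it as a single JSON line."""
    record = DecisionRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        model_version=model_version,
        input_sha256=hashlib.sha256(raw_input.encode("utf-8")).hexdigest(),
        output=output,
        human_reviewer=reviewer,
    )
    return json.dumps(asdict(record))


if __name__ == "__main__":
    print(log_decision("applicant CV text", "shortlisted", "cv-ranker-0.3", reviewer="reviewer-1"))
```

Appending one JSON line per decision keeps the log easy to audit. Recording the model version means a questionable outcome can be traced back to the exact system that produced it.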

Addressing Bias and Security Head-On

For me, the most important parts of the AI Act are those that directly tackle algorithmic bias and cybersecurity, two problems that come up all too often in the news and in computational journalism.

Mitigation of AI Bias

The Act is explicit about the need to control discrimination. For high-risk AI systems, the requirements center on data governance:

- Data Quality: Providers must use high-quality training, validation, and testing datasets that are relevant, sufficiently representative, and free of errors to the best extent possible. This is the bedrock of fairness; if your data is biased, your AI will be too.

- Bias Examination: Providers must actively examine their datasets for possible biases that could negatively affect fundamental rights or lead to discrimination. They must also take appropriate measures to prevent and mitigate any biases they identify. This transforms "fairness" from an abstract idea into a measurable requirement.
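What might "measurable" look like in practice? Here is a minimal sketch of one common check for a hiring tool: compare selection rates across groups. The Act does not prescribe a specific fairness metric. The ratio below and its 0.8 threshold come from the US "four-fifths" rule of thumb in employment practice and are used here only as an illustrative trigger for review. The data is made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes per group, from (group, selected) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Made-up illustrative data: 100 applicants in each group.
    data = [("A", True)] * 40 + [("A", False)] * 60 + [("B", True)] * 20 + [("B", False)] * 80
    rates = selection_rates(data)
    ratio = disparate_impact_ratio(rates)
    print(rates, ratio)
    # 0.8 is an example threshold (US four-fifths heuristic), not an EU AI Act rule.
    if ratio < 0.8:
        print("Flag for bias review")
```

A low ratio does not prove discrimination on its own. It is the kind of signal that should trigger the "examine and mitigate" step described above.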

Mandates for Security and Robustness

The Act also treats security as a core function, not an afterthought. High-risk AI systems must be designed for accuracy, robustness, and cybersecurity. This means they must:

- Be Resilient: The systems must be as resilient as possible against errors, faults, or inconsistencies in their environment. This can include technical redundancy solutions, such as backup or fail-safe plans.

- Counter Manipulation: High-risk AI must be resilient against attempts by unauthorized third parties to alter their use or performance by exploiting vulnerabilities. This covers AI-specific threats like data poisoning (where a bad actor manipulates the training data) or model poisoning.
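The "fail-safe plan" part of the resilience requirement maps onto a familiar engineering pattern: when the model errors out or behaves inconsistently, the case goes to a human instead of producing an automatic decision. This minimal sketch assumes a hypothetical model interface that returns a label and a confidence score, with an arbitrary 0.9 threshold. It shows one way to fail safe; it is not a pattern the Act mandates, and it does nothing against poisoning attacks.

```python
from typing import Callable, Tuple

# Hypothetical model interface: takes an input, returns (label, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]

NEEDS_HUMAN_REVIEW = "needs_human_review"


def decide_with_fallback(model: Model, case: str, min_confidence: float = 0.9) -> str:
    """Return the model's label, or route the case to a human if the model fails or is unsure."""
    try:
        label, confidence = model(case)
    except Exception:
        # Fail safe: an internal error must never become an automatic decision.
        return NEEDS_HUMAN_REVIEW
    # Out-of-range or low confidence (including NaN, which fails every comparison)
    # is treated as an inconsistency and escalated.
    if not (0.0 <= confidence <= 1.0) or confidence < min_confidence:
        return NEEDS_HUMAN_REVIEW
    return label


if __name__ == "__main__":
    def broken_model(case: str) -> Tuple[str, float]:
        raise RuntimeError("feature service unavailable")

    print(decide_with_fallback(lambda c: ("shortlisted", 0.97), "cv"))
    print(decide_with_fallback(lambda c: ("rejected", 0.55), "cv"))
    print(decide_with_fallback(broken_model, "cv"))
```

Catching every exception is deliberate here. In a life-altering decision, "I don't know" should route to human oversight, not default to a rejection.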

To be honest, when a large corporation claims that these basic measures (auditing its data for bias, securing its systems against obvious attacks, and documenting its design) are too much of a burden, it is essentially admitting that its existing practices are ethically questionable or technically negligent. If a system is making life-altering decisions (like denying a loan or a job), that level of governance shouldn't be optional.

Innovation vs. Regulation

A common argument I see online, particularly from business owners and publishers, is that the AI Act will stifle innovation, especially for Small and Medium-sized Enterprises (SMEs). I agree, but that’s the point.

Yes, compliance is a financial and technical hurdle. SMEs, which the EU defines as companies with fewer than 250 employees, have far fewer resources than global tech giants. The argument is that high compliance costs will delay product launches and make it harder for small companies to compete globally.
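The headcount is only half of the EU's SME definition. Under Commission Recommendation 2003/361/EC, a company must also have an annual turnover of no more than €50 million or a balance sheet total of no more than €43 million, and only one of those two financial ceilings has to be met. This minimal sketch encodes that test. It deliberately ignores the rules for partner and linked enterprises, which can change the figures that count, so treat it as a rough first check only.

```python
def is_sme(employees: int, annual_turnover_eur: float, balance_sheet_total_eur: float) -> bool:
    """Simplified EU SME test (Commission Recommendation 2003/361/EC).

    Ignores the rules for partner and linked enterprises, which can change
    the headcount and financial figures that count towards the thresholds.
    """
    staff_ok = employees < 250
    # Only ONE of the two financial ceilings has to be met.
    finances_ok = annual_turnover_eur <= 50_000_000 or balance_sheet_total_eur <= 43_000_000
    return staff_ok and finances_ok


if __name__ == "__main__":
    print(is_sme(120, 60_000_000, 30_000_000))  # True: turnover too high, but balance sheet is under the ceiling
    print(is_sme(300, 10_000_000, 5_000_000))   # False: too many employees
```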

However, the Act has built-in mechanisms to mitigate this:

- AI Regulatory Sandboxes: These are controlled environments where companies, with priority access for SMEs, can test innovative AI systems for a limited time under regulatory supervision. This allows them to demonstrate compliance and work out the kinks without facing immediate fines, fostering innovation while ensuring safety.

- Proportionality in Fees: Conformity assessment fees for high-risk systems must take the needs of SMEs into account and be reduced in proportion to their size and market size.

The regulation sets a baseline of user protection (users first!). If a small business's growth model relies on collecting and using sensitive user data without proper security, auditing, and human oversight, I would argue that the business model should be challenged. By getting compliant early, a company builds a reputation for trustworthiness, which is a massive competitive advantage in an era when trust is so often compromised.

What Comes Next

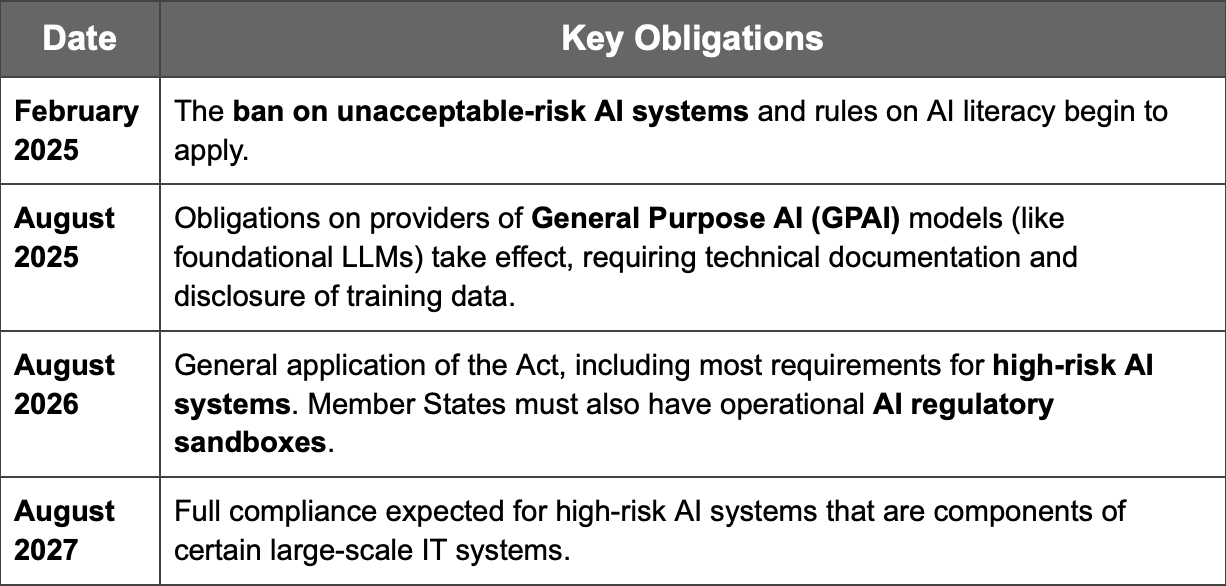

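The enforcement of the AI Act has multiple milestones, which gives companies time to prepare. The law formally entered into force on 1 August 2024, but its obligations roll out in stages:

- 2 February 2025: The bans on unacceptable-risk practices and the AI literacy obligations apply.

- 2 August 2025: The obligations for general-purpose AI models, the governance structure, and the rules on penalties apply.

- 2 August 2026: Most remaining provisions apply, including the requirements for high-risk systems such as CV-sorting software.

- 2 August 2027: The requirements for high-risk AI embedded in products already covered by EU product-safety legislation (such as medical devices) apply.

These deadlines mean that every company operating in the EU, or selling to EU consumers, should start inventorying its AI systems and planning its governance now. The fines for non-compliance are steep: for the most serious violations, they reach up to €35 million or 7% of a company's total worldwide annual turnover, whichever is higher. 💰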
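To see how the "€35 million or 7%" ceiling scales, here is a small worked example for the top penalty tier, which covers prohibited practices. For most companies the cap is whichever amount is higher. For SMEs and start-ups, the Act flips this to whichever is lower. These are ceilings, not the fine anyone would actually receive, and lower tiers for other violations (such as €15 million or 3%) are not modelled here.

```python
FIXED_CAP_EUR = 35_000_000   # top tier: prohibited AI practices
TURNOVER_SHARE_PERCENT = 7   # ...or 7% of total worldwide annual turnover


def max_fine_prohibited_practice(worldwide_turnover_eur: int, is_sme: bool = False) -> float:
    """Upper bound of the fine for a prohibited-practice violation.

    Non-SMEs: whichever of the two caps is HIGHER.
    SMEs and start-ups: whichever is LOWER.
    """
    turnover_cap = worldwide_turnover_eur * TURNOVER_SHARE_PERCENT / 100
    if is_sme:
        return float(min(FIXED_CAP_EUR, turnover_cap))
    return float(max(FIXED_CAP_EUR, turnover_cap))


if __name__ == "__main__":
    print(max_fine_prohibited_practice(2_000_000_000))            # 140000000.0
    print(max_fine_prohibited_practice(100_000_000))              # 35000000.0
    print(max_fine_prohibited_practice(20_000_000, is_sme=True))  # 1400000.0
```

For a company with €2 billion in turnover, the 7% share (€140 million) dwarfs the fixed €35 million. This is why the percentage, not the headline figure, is what large firms should be budgeting around.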

I believe this approach is a smart and balanced way to introduce a major regulatory change. It gives developers and deployers time to build governance structures (which really should have been there all along).

How do you view this new global standard? As a developer or business owner, what specific compliance challenge feels most daunting, or alternatively, which user protection measure do you find most reassuring? I'm genuinely interested in hearing your perspective on balancing innovation with ethical responsibility.
