The Plateau is Here: This is What's Next For AI

When ChatGPT launched, it felt like we'd just begun an exciting journey, racing towards an unknown horizon. Each new iteration of Large Language Models (LLMs) brought increasing capabilities, hinting at a future once only imagined in science fiction.

Yet, with recent releases, many of us have started to feel a sense of disappointment. The jumps in performance that once felt monumental have become incremental. It feels like we have reached a plateau, as if the steep climb has leveled off into a long, flat stretch of highway.

This feeling has led to a crucial question: if LLMs are no longer making those giant leaps, where are we actually going? The future of AI isn't about some distant, all-powerful intelligence, but rather the very real, practical, and sometimes messy work of integrating these tools into our lives.

The future of AI is not a single point on the horizon, but a constellation of different, interconnected developments. Here is a look at what I think is next for AI and why these developments matter.

Trend 1: The Rise of Agentic AI and Specialized Systems

The conversation around AI has, for a long time, been dominated by the idea of building a single, all-knowing "brain" (AGI, for those in the know). We have been so focused on creating a perfect, general-purpose LLM that we have overlooked the most practical path forward: building systems that are highly specialized.

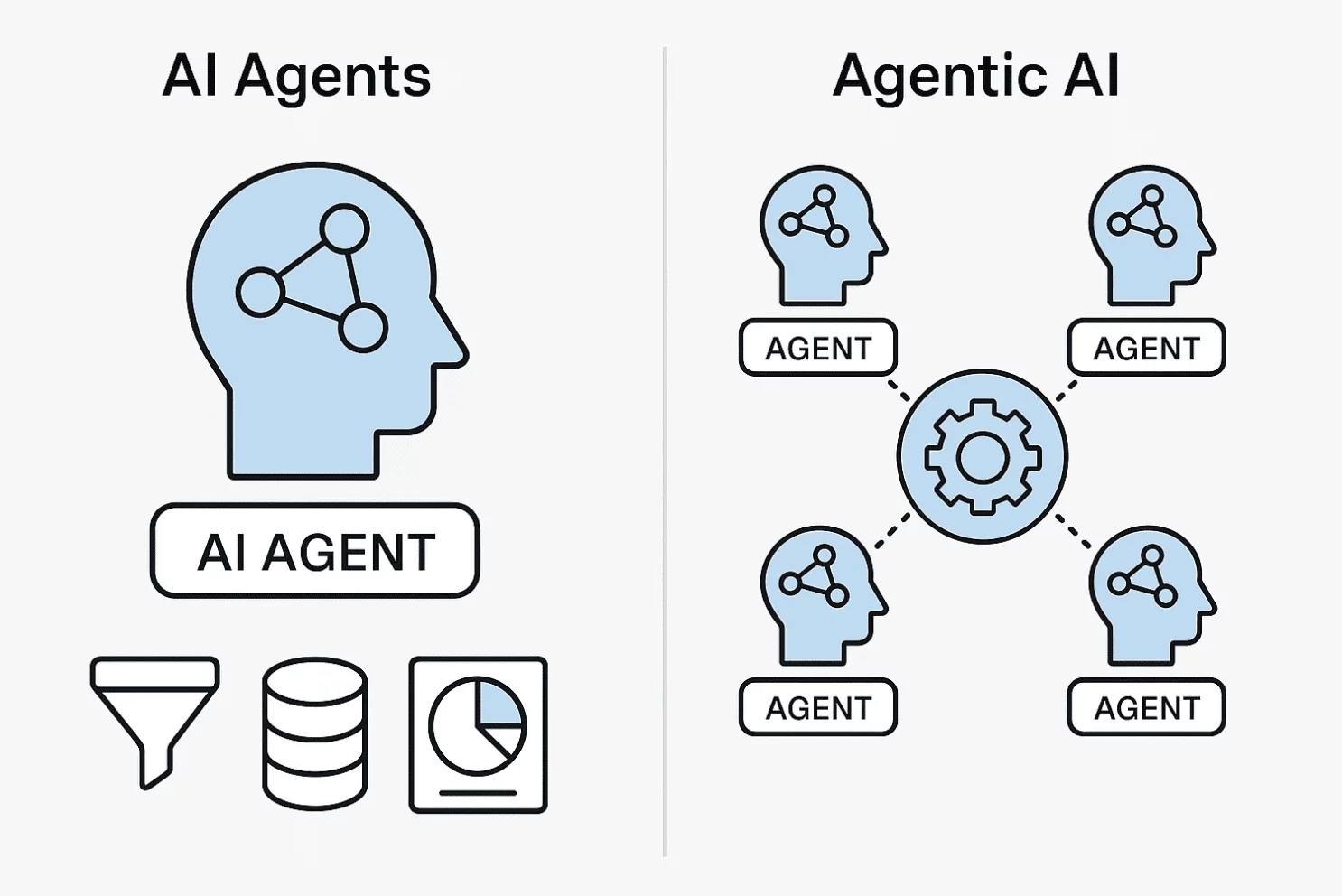

The next generation of AI will likely be defined by "agentic" systems. These are not just chatbots that answer questions; they are AI programs that can break down a complex task into smaller sub-tasks, use other tools to complete those sub-tasks, and then put everything together to achieve a larger goal.

Imagine an AI designed specifically to help with scientific research. You might give it a prompt like, "Find a new compound to treat a specific disease and provide a research paper with your findings." The AI could then do the following:

- Search academic databases to understand the disease.

- Formulate hypotheses about potential compounds.

- Write and run code to simulate the chemical properties of those compounds.

- Gather the results, synthesize the data, and write a full research paper.

This kind of AI is less about raw intelligence and more about orchestration and execution. It's about giving a capable tool a clear goal and the ability to use other tools to get there. The future isn't about one giant brain, but about a network of smaller, highly capable specialists working together.
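To make the orchestration idea concrete, here is a minimal sketch of the loop described above: plan the sub-tasks, call a tool for each one, and carry the results forward. Everything in it is an assumption for illustration. The `Step` type, the four stub tools, and the fixed `plan` function are hypothetical stand-ins; in a real agent an LLM would likely produce the plan, and the tools would call actual databases and simulators.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    """One sub-task: which tool to call and what to ask it."""
    tool: str
    request: str


# Stub tools standing in for real services (all hypothetical).
# Each tool receives the request plus the notes gathered so far.
def search_literature(request: str, notes: List[str]) -> str:
    return f"papers found for '{request}'"


def propose_hypotheses(request: str, notes: List[str]) -> str:
    return f"hypotheses based on {len(notes)} earlier note(s)"


def run_simulation(request: str, notes: List[str]) -> str:
    return f"simulation results for '{request}'"


def write_report(request: str, notes: List[str]) -> str:
    # The final tool synthesizes everything collected by earlier steps.
    return "REPORT\n" + "\n".join(f"- {note}" for note in notes)


TOOLS: Dict[str, Callable[[str, List[str]], str]] = {
    "search": search_literature,
    "hypothesize": propose_hypotheses,
    "simulate": run_simulation,
    "report": write_report,
}


def plan(goal: str) -> List[Step]:
    """Return a fixed plan; a real agent would ask a model to write this."""
    return [
        Step("search", goal),
        Step("hypothesize", goal),
        Step("simulate", goal),
        Step("report", goal),
    ]


def run_agent(goal: str) -> str:
    """Execute each planned step in order, passing earlier results along."""
    notes: List[str] = []
    result = ""
    for step in plan(goal):
        result = TOOLS[step.tool](step.request, notes)
        notes.append(result)
    return result


if __name__ == "__main__":
    print(run_agent("a compound to treat disease X"))
```

Notice that none of the "intelligence" lives in the loop itself. The loop only decides what runs next and what context each tool gets, which is the orchestration and execution part of the picture.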

We can already see this in a public product: Gemini's Deep Research feature, where a ⭐phalanx⭐ (word of the week) of agents comes together to plan, research, and write about whatever topic you give it.

Trend 2: The Influence of AI "Influencers" and Newsrooms

These changes in AI are happening at the same time as a major shift in how we get our information. Traditional journalism is under pressure from multiple directions, and one of the most interesting is the rise of the AI "influencer." The term can refer to a person who talks about AI, a creator who uses AI-generated content, or even a fully AI-generated personality.

These "AI influencers" can both help and hurt journalism. On one hand, AI can be used to create highly personalized content, summarize long articles, and generate headlines that increase engagement. Some newsrooms are already creating AI-driven audio versions of their articles to reach younger audiences who prefer listening to reading. These are practical, efficiency-focused applications that can help a struggling industry stay afloat.

However, there are plenty of downsides. AI-generated content can lack the nuance and deep understanding that a human journalist brings. We are also seeing a rise in "AI slop" that gets scraped by other AI models, creating a kind of "model ouroboros" in which AI learns from its own low-quality output. This effect, often called model collapse, has been shown to degrade output quality over successive generations.

The rise of AI influencers also raises concerns about transparency. When an audience can't tell the difference between a human creator and an AI persona, it can erode the foundation of trust that journalism is built on. It forces us to ask tough questions about who or what we are listening to and what their true motives are.

Trend 3: The Infrastructure Era of AI

As the performance of core LLMs plateaus, the focus is shifting from building better models to building better systems around them. We are entering what many are calling the "infrastructure era" of AI.

This means we will see companies focusing on things like:

- Explainability: Creating AI systems that can show their work. Instead of just giving an answer, the AI will be able to show a transparent, step-by-step breakdown of how it arrived at its conclusion. This is vital for industries like medicine and finance, where trust and accountability are non-negotiable.

- Integration: Building AI that can seamlessly integrate with existing business workflows, databases, and software. The goal is no longer to make a stand-alone chatbot, but to create an AI that can work within a company's existing ecosystem, automating tasks and providing insights without needing a human to act as an intermediary.

- Specialized Models: Instead of one massive model for everything, companies are creating smaller, more efficient models trained on specific, high-quality data. A company might have a specialized AI model for legal documents, another for internal memos, and another for customer support, each one optimized for its unique task.
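As a rough sketch of how the specialized-model setup might be wired together, the snippet below routes each request to one of three stand-in "models". The model functions, the `KEYWORDS` table, and the keyword-matching rule are all assumptions made up for illustration; a production system would more plausibly use a trained classifier or a small LLM as the router.

```python
from typing import Callable, Dict, Tuple


# Stand-ins for specialized models (hypothetical; real ones would be API calls).
def legal_model(text: str) -> str:
    return f"[legal] {text}"


def memo_model(text: str) -> str:
    return f"[memo] {text}"


def support_model(text: str) -> str:
    return f"[support] {text}"


SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "legal": legal_model,
    "memo": memo_model,
    "support": support_model,
}

# Crude routing signal: substrings that hint at a domain.
# Substring matching is deliberately simple and will misfire on some inputs.
KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "legal": ("contract", "clause", "liability"),
    "support": ("refund", "order", "password"),
}


def route(text: str) -> str:
    """Pick a specialist by keyword, falling back to the memo model."""
    lowered = text.lower()
    for name, words in KEYWORDS.items():
        if any(word in lowered for word in words):
            return name
    return "memo"


def answer(text: str) -> str:
    """Send the request to whichever specialist the router selects."""
    return SPECIALISTS[route(text)](text)


if __name__ == "__main__":
    for request in (
        "Review this contract clause",
        "I need a refund for my order",
        "Notes from Monday's meeting",
    ):
        print(route(request), "->", answer(request))
```

The design choice worth noticing is that the router is itself a piece of infrastructure: swapping in a better specialist, or adding a fourth, changes a table entry rather than the whole system.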

This shift from "model-first" to "infrastructure-first" AI is less glamorous than the promise of superintelligence, but it is far more practical. It is the necessary, foundational work that will make AI a reliable and trustworthy part of our professional and personal lives.

So, while the sci-fi dream of a singular, all-knowing AI may be fading, the reality that is emerging is perhaps more interesting. We are moving toward a future where AI is not a monolith, but a diverse ecosystem of specialized, integrated, and reliable tools. The conversation is no longer just about what AI can do, but about how we can effectively and ethically use it to solve real-world problems.

What do you think is the most exciting development in AI right now? Do you believe AI influencers will ever be a trusted source of news, or are we heading toward a future of even greater misinformation? I would love to hear your thoughts in the comments below.

