The AI Editor: How to Automate Fact-Checking? (Part 1)

I remember a conversation I had with a journalist a few years ago. We were discussing the firehose of information on social media, especially during events like January 6th or the Notre-Dame Cathedral fire. He sighed and said, "There are so many claims flying around, and we just don't have enough people or time to check them all. It feels like we're always running to catch up." That statement has stuck with me. In a world where a lie can circle the globe before the truth has tied its shoes, the pressure on news organizations to verify every statement is immense, bordering on impossible.

This is where the intersection of Artificial Intelligence (AI) and journalism becomes not just interesting, but essential. We have to stop thinking of AI as a replacement and start seeing it as an accelerator for the truth.

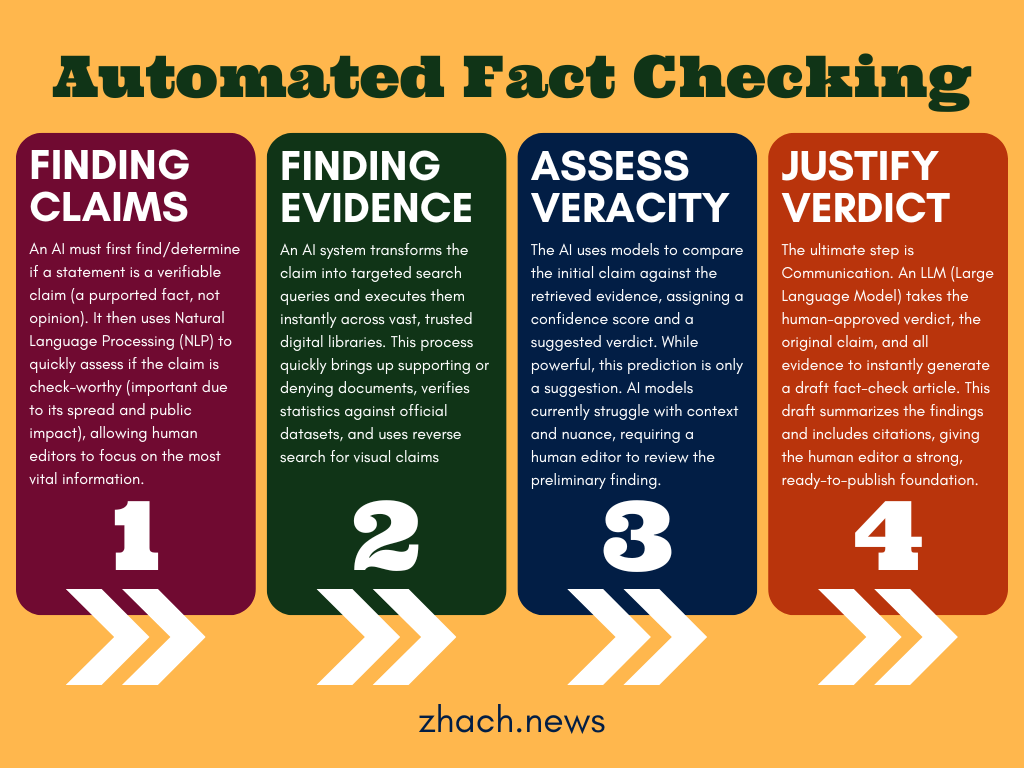

The manual process of fact-checking (a deeply human task of research and critical thinking) is time-consuming. However, by breaking this process down into discrete, repeatable steps, we can introduce automation at critical points, transforming the work from a slow handmade craft into a more scalable industrial process. The goal isn't full automation, but powerful assistance.

Step 1: Finding Claims Worth Checking

This initial stage is the most critical and, arguably, the most technically difficult. Before we can check a fact, we must first identify it.

- Where do we find claims? Web scrapers collect content from across the web and feed their output into claim extraction models, which pull out the “possibly false” statements.

- What is a Claim? A claim is a specific statement of fact about the real world that is verifiable. For example, "The new highway project will cost $5 billion" is a claim. "The highway project is terrible" is an opinion and cannot be fact-checked.

- Is it Check-Worthy? Not every verifiable statement needs a fact-check. An AI needs to quickly decide if a claim is important enough, or "check-worthy," to warrant resources. It does this by monitoring massive streams of content (social media, transcripts, news feeds) and using Natural Language Processing (NLP) models. These models look at factors like the virality (how fast and wide it's spreading), the public interest, and the potential societal impact of the claim. This process filters out millions of pieces of noise and presents human editors with a prioritized queue of what matters most right now. This ability to find and prioritize is the biggest time-saver for any newsroom.
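To make the prioritization idea concrete, here is a minimal sketch of how a check-worthiness score could combine the three factors mentioned above. Everything in it is an assumption for illustration: the `Claim` fields, the weights, and the `max_shares_per_hour` cap are hypothetical, and a real system would learn these signals with NLP models rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    text: str
    shares_per_hour: float   # hypothetical virality signal
    public_interest: float   # hypothetical score in [0, 1]
    societal_impact: float   # hypothetical score in [0, 1]


def check_worthiness(claim, max_shares_per_hour=10_000.0):
    """Combine the three factors into one score in [0, 1].

    The weights are illustrative guesses, not tuned values.
    """
    # Cap virality at 1.0 so one viral post cannot dominate the score.
    virality = min(claim.shares_per_hour / max_shares_per_hour, 1.0)
    return 0.4 * virality + 0.3 * claim.public_interest + 0.3 * claim.societal_impact


def prioritize(claims):
    """Return claims sorted from most to least check-worthy."""
    return sorted(claims, key=check_worthiness, reverse=True)


queue = prioritize([
    Claim("Local bakery changes opening hours", 40, 0.1, 0.05),
    Claim("The new highway project will cost $5 billion", 9_000, 0.8, 0.9),
])
for claim in queue:
    print(f"{check_worthiness(claim):.2f}  {claim.text}")
```

The editor only ever sees the sorted queue, so the weights can be adjusted later without changing anything downstream.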

Step 2: Finding Sources and Evidence

Once a claim is flagged, a human would usually start running searches to find documents that support or contradict it. AI takes this research and supercharges it.

The automated system converts the claim into a series of search queries and then executes them across vast, curated libraries of trusted information, such as government databases, academic archives, and established news sources. This is called evidence retrieval.
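As a rough illustration of evidence retrieval, the sketch below ranks documents in a tiny in-memory corpus by word overlap with the claim. This is a deliberately simple stand-in: production systems typically use search indexes or embedding models, and the corpus entries and the `retrieve_evidence` function are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_evidence(claim, corpus, top_k=3):
    """Rank documents by Jaccard overlap with the claim; drop zero-overlap docs."""
    claim_tokens = tokenize(claim)
    scored = []
    for doc in corpus:
        doc_tokens = tokenize(doc["text"])
        union = claim_tokens | doc_tokens
        score = len(claim_tokens & doc_tokens) / len(union) if union else 0.0
        if score > 0:
            scored.append((score, doc))
    # Sort on the score only, so dictionaries are never compared.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


# Hypothetical corpus entries, for illustration only.
corpus = [
    {"source": "transport-ministry", "text": "Highway project budget set at 5 billion"},
    {"source": "weather-service", "text": "Rain expected on Tuesday"},
]
for doc in retrieve_evidence("The new highway project will cost $5 billion", corpus):
    print(doc["source"])
```

Plain word overlap is easy to fool (two unrelated sentences can share many common words), which is one reason the retrieved evidence still goes to a human reader.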

Check out my other article about some retrieval sources one could use: https://zhach.news/independent-reporters-playbook

For claims involving data or statistics, the AI can be trained to look up official public datasets and match the numbers directly. For visual claims, the system can deploy a reverse image search to find the original context of a photo or a video. AI can complete in seconds the deep background search that would take a journalist hours, instantly bringing the most relevant, often hidden, evidence to the forefront.
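For numeric claims, matching "the numbers directly" could look something like the sketch below: pull the first figure out of the claim and compare it to an official value within a tolerance. The helper names, the scale words handled, and the 5% tolerance are all assumptions, and looking up the official value itself is left out.

```python
import re

# Scale words this sketch understands; anything else is read as a plain number.
_SCALES = {"thousand": 1e3, "million": 1e6, "billion": 1e9, "trillion": 1e12}


def extract_amount(text):
    """Return the first number in the text, scaled by a following scale word."""
    match = re.search(
        r"(\d+(?:\.\d+)?)\s*(thousand|million|billion|trillion)?", text.lower()
    )
    if match is None:
        return None
    return float(match.group(1)) * _SCALES.get(match.group(2), 1.0)


def matches_official(claim, official_value, tolerance=0.05):
    """True/False if the claimed figure is within tolerance; None if not comparable."""
    claimed = extract_amount(claim)
    if claimed is None or official_value == 0:
        return None
    return abs(claimed - official_value) / abs(official_value) <= tolerance


claim = "The new highway project will cost $5 billion"
print(matches_official(claim, official_value=5.1e9))
print(matches_official(claim, official_value=8.0e9))
```

Returning `None` when no figure is found keeps opinions like "The highway project is terrible" out of the numeric check entirely.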

Step 3: Assessing Veracity and Suggesting a Verdict

With the claim and its supporting evidence in hand, the system moves toward a preliminary verdict. This is done through veracity prediction models, which compare the claim against the retrieved evidence and assign a confidence score or a suggested label (e.g., True, False, Misleading).

If multiple, highly credible sources retrieved in Step 2 all clearly contradict the claim, the system's confidence in a "False" verdict will be high.
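One simple way to picture this is a credibility-weighted vote over the retrieved evidence. The sketch below is an assumption-heavy toy: real veracity prediction models are trained classifiers, and the `stance` and `credibility` fields, the 0.7 threshold, and the "Unverified" and "Needs human review" labels are all hypothetical.

```python
def suggest_verdict(evidence, min_confidence=0.7):
    """Suggest a label and a confidence from stance-tagged evidence.

    Each item is a dict with a "stance" ("supports" or "refutes") and a
    "credibility" weight in [0, 1]; both fields are hypothetical.
    """
    support = sum(e["credibility"] for e in evidence if e["stance"] == "supports")
    refute = sum(e["credibility"] for e in evidence if e["stance"] == "refutes")
    total = support + refute
    if total == 0:
        return "Unverified", 0.0

    if refute > support:
        label, confidence = "False", refute / total
    else:
        label, confidence = "True", support / total

    # Low-confidence suggestions are handed to the editor without a label.
    if confidence < min_confidence:
        label = "Needs human review"
    return label, confidence


evidence = [
    {"stance": "refutes", "credibility": 0.9},
    {"stance": "refutes", "credibility": 0.8},
    {"stance": "refutes", "credibility": 0.95},
]
print(suggest_verdict(evidence))
```

Note that this toy has no way to produce a "Misleading" label, which is exactly the kind of contextual judgment that falls to the human editor.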

It's vital to understand that this step remains a suggestion. Current AI models struggle with the subtleties of context, a key factor in accurate fact-checking. A quotation might be factually correct but intentionally misleading if taken out of a larger discussion. That judgment, which requires an understanding of human intent, language nuance, and current events, is where the human editor steps in to validate or override the AI's preliminary finding.

Step 4: Drafting the Fact-Check Article

The final stage is communication. A good fact-check doesn't just give a verdict; it explains why that verdict was reached.

A Large Language Model (LLM) can take the human-approved verdict, the original claim, and all the sources retrieved, and generate a draft article. This draft can summarize the core facts, outline the conflicting evidence, and clearly state the conclusion. It creates a solid foundation, complete with citations, allowing the human editor to polish the prose, ensure the tone is educational, and add the necessary contextual detail that makes the debunking article effective.
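In practice, much of this step is about assembling the right inputs for the model. The sketch below only builds the prompt text and stops short of calling any LLM, since that call depends on the provider. The function name, the prompt wording, and the `title` and `url` fields are assumptions for illustration.

```python
def build_draft_prompt(claim, verdict, sources):
    """Assemble a drafting prompt from the claim, the verdict, and the sources.

    `sources` is a list of dicts with hypothetical "title" and "url" keys.
    """
    # Number the sources so the draft can cite them as [1], [2], ...
    citations = "\n".join(
        f"[{i}] {s['title']} ({s['url']})" for i, s in enumerate(sources, start=1)
    )
    return (
        "Write a draft fact-check article.\n"
        f"Claim: {claim}\n"
        f"Editor-approved verdict: {verdict}\n"
        "Sources:\n"
        f"{citations}\n"
        "Summarize the core facts, outline any conflicting evidence, "
        "state the conclusion clearly, and cite sources by number."
    )


prompt = build_draft_prompt(
    claim="The new highway project will cost $5 billion",
    verdict="False",
    sources=[{"title": "Hypothetical budget report", "url": "https://example.org/budget"}],
)
print(prompt)
```

Passing in the human-approved verdict, rather than letting the model pick one, keeps the editor's decision as the fixed point of the draft.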

The true value of automated fact-checking is the speed and efficiency it introduces at every point of this pipeline, freeing up human expertise for the critical work of judgment and storytelling.

What Do You Prioritize in the Race for Speed? ⏱️

This shift towards automation is about increasing productivity, but it introduces trade-offs between speed and thoroughness.

If you were a fact-checker with limited time, which of these automated capabilities would you want your AI assistant to prioritize first?

- Claim Prioritization: The AI tells you what to check first based on its potential impact.

- Evidence Retrieval: The AI instantly delivers all relevant background sources you need to read.

What do you think?

By the way, a lot of the research here was supported by this GitHub page. So thank you @Cartus for such a robust set of information!
